Arista Networks - the Menlo Park, CA "cloud networking solutions" company - has announced the first competitor to Cisco's Nexus 1000V virtual distributed switch for VMware vSphere. Called simply vEOS (Virtualized Extensible Operating System), the virtual machine application integrates into VMware's vNetwork Distributed Switch (vNDS) framework.

The vNDS architecture already preserves a VM's network state across vMotion events and simplifies port configuration. vEOS enhancements will include QoS (with TX/RX limiting), ACL enforcement (when used with Arista's 7000 series switch family), CLI configuration and management, distributed port profiles, port profile inheritance, VMware port mirroring, SNMP v3 read/write, syslog export, an active/standby control plane, SSH/telnet access to the CLI, "hitless" control plane upgrades, non-disruptive installation and an integrated vSwitch upgrade workflow.

Another advantage of vEOS for adopters of Arista's 7000-series 10Gbase-T switches is that it is derived from the switches' own EOS code base. The EOS image is monolithic with respect to feature set, so there are no software "trains to catch" for compatibility and the like. Michael Morris from NetworkWorld has an article about the vEOS announcement, with additional information about Arista's plans and how to get your hands on a beta version of vEOS. See also Arista's official press release about vEOS.

SOLORI's Take: While CNAs are great, 10Gbase-T offers a less "process disruptive" path to greater network bandwidth. With vEOS, Arista is challenging both Cisco's presence in the VMware market and its vision for network convergence. Just as Ethernet won out over "superior" technologies on the strength of its simplicity, we expect 10Gbase-T's drop-in simplicity (CAT5e support, 100M/1G/10G auto-negotiation, etc.) to drive significant market share for related products. With the 2010 battle lines drawn in the virtualization platform market, the all-important network segment will be as much a scalability factor as a budgetary one.

Given the "massive" virtualization potential of the 2P/4P hardware due in 2010 (see our Quick Take on 48-core virtualization), the obvious conduit for network traffic into 180+ VM consolidations is 10GE. The question: will 10Gbase-T deliver the order-of-magnitude economies of scale needed to outweigh the technical advantages of DCE/CNA-based networking?
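A back-of-the-envelope sketch shows why 10GE looks like the natural conduit at this density. It simply divides aggregate uplink bandwidth evenly across 180 VMs; the 4x1GbE and 2x10GbE host configurations are hypothetical examples, and even sharing is an idealization, since real VM traffic is bursty.

```python
# Average uplink bandwidth per VM, assuming traffic is shared evenly across
# all VMs and links. Real VM traffic is bursty, so this is only a rough guide.

def per_vm_mbps(links: int, link_gbps: float, vms: int) -> float:
    """Aggregate uplink bandwidth divided evenly among VMs, in Mbps."""
    return links * link_gbps * 1000 / vms

VMS = 180  # the "180+ VM" consolidation from the text

# Hypothetical host uplink configurations, for comparison only.
uplinks = {
    "4 x 1GbE": (4, 1.0),
    "2 x 10GbE": (2, 10.0),
}

for name, (links, gbps) in uplinks.items():
    print(f"{name}: {per_vm_mbps(links, gbps, VMS):.1f} Mbps per VM")
```

Roughly 22 Mbps per VM on four gigabit links versus roughly 111 Mbps per VM on two 10GE links is the gap that makes 10GE attractive for consolidations of this size.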

Sunday, August 30, 2009

Wednesday, August 26, 2009

In Part 3 of this series we showed how to install and configure a basic NexentaStor VSA using iSCSI and NFS storage. We also created a CIFS bridge for managing ISO images that are made available to our ESX servers over NFS. We now have a fully functional VSA with a working iSCSI target (not yet mounted) and a read-only NFS export mounted to the hardware host.

In this segment, Part 4, we will create an ESXi instance on NFS along with an ESX instance on iSCSI, and, using writable snapshots, turn both of these installations into quick-deploy templates. We'll then mount our large iSCSI target (created in Part 3) and NFS-based ISO images to all ESX/ESXi hosts (physical and virtual), and get ready to install our vCenter virtual machine.


Part 4, Making an ESX Cluster-in-a-Box

With a lot of groundwork behind us in Parts 1 through 3, we are going to pick up the pace a bit. Although ZFS snapshots are immediately available in a hidden ".zfs" folder for each snapshotted file system, we are going to clone the snapshots and mount the cloned file systems instead.

Cloning allows us to re-use a file system as a template for a copy-on-write variant of the source. By using the clone instead of the original, we conserve storage because only the differences between the two file systems (the clone and its source) are stored to disk. Cloning saves time as well, letting us use "clean installations" and their associated storage as starting points (templates), much like VMware's linked-clone technology for VDI. VMware's "template" capability also saves time by using a VM as a "starting point", but it does so by copying storage, not cloning it, and therefore conserves no storage.
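To make the storage savings concrete, here is a toy Python model of copy-on-write cloning. It is a simplified sketch, not how ZFS is implemented: blocks are dictionary entries, and the 1,000-block template, three clones and 10 rewritten blocks per clone are arbitrary illustrative numbers.

```python
from __future__ import annotations


class Snapshot:
    """Read-only, point-in-time copy of a file system's blocks."""

    def __init__(self, blocks: dict[int, str]):
        self.blocks = dict(blocks)


class Clone:
    """Writable file system that shares every unmodified block with its origin."""

    def __init__(self, origin: Snapshot):
        self.origin = origin
        self.private: dict[int, str] = {}  # blocks written after cloning

    def write(self, block_no: int, data: str) -> None:
        # Copy-on-write: new data lands in the clone's own space and the
        # origin snapshot is never modified.
        self.private[block_no] = data

    def read(self, block_no: int) -> str:
        # Prefer the clone's own copy; otherwise fall through to the origin.
        if block_no in self.private:
            return self.private[block_no]
        return self.origin.blocks[block_no]

    def unique_blocks(self) -> int:
        """Blocks this clone consumes beyond what it shares with the origin."""
        return len(self.private)


# A "clean install" template of 1,000 blocks and three clones of it.
template = Snapshot({n: f"esx-install-{n}" for n in range(1000)})
clones = [Clone(template) for _ in range(3)]

# Each cloned host rewrites 10 blocks (say, its host-specific configuration).
for i, clone in enumerate(clones):
    for n in range(10):
        clone.write(n, f"host{i}-config-{n}")

cloned_total = len(template.blocks) + sum(c.unique_blocks() for c in clones)
copied_total = len(template.blocks) * (1 + len(clones))  # template + 3 full copies
print(f"clones: {cloned_total} blocks, full copies: {copied_total} blocks")
```

Under these assumptions the template plus three clones occupy 1,030 blocks, where the template plus three full copies would need 4,000.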

[caption id="attachment_1094" align="aligncenter" width="402" caption="Using clones in NexentaStor to conserve storage and aid rapid deployment and testing. Only the differences between the source and the clone require additional storage on the NexentaStor appliance."]

[/caption]

While ESX and ESXi might not seem like ideal candidates for cloning in a "production" environment, in the lab cloning opens up an abundance of possibilities for regression and isolation testing. In production, you might find that NFS and iSCSI boot capabilities make cloned hosts just as effective for deployment and backup as they are in the lab (but that's another blog).

Here's the process we will follow in this part of the lab series:

- Create an NFS folder in NexentaStor for the ESXi template and share it via NFS;

- Modify the NFS folder properties in NexentaStor to:

  - limit access to the hardware ESXi host only;

  - grant the hardware ESXi host "root" access;

- Create a folder in NexentaStor for the ESX template and create a Zvol;

- From the VI Client's "Add Storage..." function, add the new NFS and iSCSI volumes as datastores;

- Create clean ESX and ESXi installations in these "template" volumes to serve as cloning sources;

- Unmount the "template" volumes using the VI Client and unshare them in NexentaStor;

- Clone the "template" Zvol and NFS file systems using NexentaStor;

- Mount the clones with the VI Client and complete the ESX and ESXi installations;

- Mount the main Zvol and ISO storage to ESX and ESXi as primary shared storage.
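For readers who prefer the command line, the workflow above maps roughly onto standard ZFS commands. The sketch below only builds and prints a dry-run plan; the pool name, host name, Zvol size and clone names are hypothetical, and NexentaStor's web UI remains the path we use in this series. Exposing the Zvols over iSCSI is left to NexentaStor and is not shown.

```python
# Dry-run plan: print the ZFS commands, do not execute them.
# POOL, ESXI_HW_HOST, the 20G Zvol size and the clone names are hypothetical.
from __future__ import annotations

POOL = "vsa-pool"         # hypothetical pool name
ESXI_HW_HOST = "esxi-hw"  # hypothetical hostname of the hardware ESXi host


def template_plan(clone_names: list[str]) -> list[str]:
    nfs_tpl = f"{POOL}/esxi-template"  # NFS folder holding the ESXi template
    zvol_tpl = f"{POOL}/esx-template"  # Zvol backing the iSCSI ESX template
    # Solaris share_nfs options: read/write and root access for one host only.
    nfs_opts = f"rw={ESXI_HW_HOST},root={ESXI_HW_HOST}"
    cmds = [
        f"zfs create {nfs_tpl}",
        f"zfs set sharenfs={nfs_opts} {nfs_tpl}",
        f"zfs create -V 20G {zvol_tpl}",
        # (install ESX/ESXi into the templates, then unmount them in VI Client)
        f"zfs set sharenfs=off {nfs_tpl}",
        f"zfs snapshot {nfs_tpl}@clean",
        f"zfs snapshot {zvol_tpl}@clean",
    ]
    for name in clone_names:
        nfs_clone = f"{POOL}/{name}-esxi"
        cmds += [
            f"zfs clone {nfs_tpl}@clean {nfs_clone}",
            # Clones inherit properties from their parent dataset, not from
            # the origin, so the NFS share must be set again on the clone.
            f"zfs set sharenfs={nfs_opts} {nfs_clone}",
            f"zfs clone {zvol_tpl}@clean {POOL}/{name}-esx",
        ]
    return cmds


for cmd in template_plan(["lab01"]):
    print(cmd)
```

Generating the plan as plain strings keeps the sketch safe to run anywhere and makes the ordering (unshare, snapshot, then clone) easy to review before anything touches the pool.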

[caption id="attachment_1095" align="aligncenter" width="450" caption="Basic storage architecture for the ESX-on-ESX lab."]

[/caption]

Quick Take: HP Sets Another 48-core VMmark Milestone

Not satisfied with a landmark VMmark score that crossed the 30-tile mark for the first time, HP's performance team went back to the benches two weeks later and took another swing at the performance crown. The effort paid off: HP significantly outpaced its two-week-old record with a score of 53.73@35 tiles in the heavyweight, 48-core category.

The new run uses the same 8-processor HP ProLiant DL785 G6 platform as the previous one - 2.8GHz AMD Opteron 8439 SE 6-core chips, 256GB of DDR2/667 and even the same ESX 4.0 build (164009) - yet shows significant gains in the javaserver, mailserver and database results. So what changed to add another 5 tiles to the team's run? It would appear that someone was unsatisfied with the storage configuration for the mailserver VMs.

Given that the previous run's tile ratio was about 6% higher than that of its 24-core counterpart, there may have been a hint that untapped capacity was available. According to the run notes, aside from the 5 LUNs and 5 clients added to support the 5 additional tiles, the only reported change to the test configuration was a note that the "data drive and backup drive for all mailserver VMs" were repartitioned using AutoPart v1.6.

The higher tile count effectively reduces the virtualization cost of the system by about 14%, to roughly $257/VM, closing in on its 24-core sibling to within $10/VM and stretching its lead over "Dunnington" rivals to about $85/VM. While virtualization is not the primary application for 8P systems, this demonstrates that 48-core virtualization is definitely viable.
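The cost-per-VM arithmetic is simple enough to check. The sketch assumes the roughly $54,000 system estimate from our August 12 Quick Take and VMmark's six VMs per tile (30 tiles = 180 VMs); the system cost is our estimate, not an HP list price.

```python
# Cost per VM for the two DL785 G6 runs, assuming our ~$54,000 system
# estimate (not an HP list price) and VMmark's 6 VMs per tile.
SYSTEM_COST_USD = 54_000
VMS_PER_TILE = 6


def cost_per_vm(tiles: int) -> float:
    return SYSTEM_COST_USD / (tiles * VMS_PER_TILE)


old = cost_per_vm(30)  # 47.77@30 tiles (180 VMs)
new = cost_per_vm(35)  # 53.73@35 tiles (210 VMs)
print(f"${old:.0f}/VM -> ${new:.0f}/VM ({1 - new / old:.1%} lower)")
```

Adding 5 tiles to the same hardware spreads the fixed cost across 30 more VMs, which is where the per-VM saving of roughly one seventh comes from.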

SOLORI's Take: HP's performance team has done a great job tuning its flagship AMD platform, demonstrating that platform performance depends not just on clock speed or core count but on balanced tuning all around. This tuning yields an 18% improvement in incremental scalability, bringing it within about 3% of the 12-core to 24-core scaling factor and making the 8P system a genuinely viable option for virtualization.

In recent discussions with AMD about SR5690 chipset applications for Socket-F, AMD reiterated that the mainstream focus for SR5690 has been Magny-Cours and its Q1/2010 launch. Given the close relationship between Istanbul and Magny-Cours - detailed nicely by Charlie Demerjian at Semi-Accurate - the bar is clearly set for 2P and 4P virtualization systems designed around these chips. Extrapolating from the similarities and the improvements to I/O and memory bandwidth, we expect to see 2P VMmark scores besting 32@23 and 4P scores over 54@39 from HP, AMD and Magny-Cours.

SOLORI's 2nd Take: Intel has been plugging away at Nehalem-EX for 8-way systems, which - delivering 128 threads - promises some insane VMmark scores. Assuming Intel's EX scales as efficiently as AMD's new Opterons have, our extrapolations indicate that a 4P, 64-thread Nehalem-EX should score between 41@29 and 44@31 given the current crop of speed and performance bins. Using the same methods, our calculus predicts that an 8P, 128-thread EX system should deliver scores between 64@45 and 74@52.

With EX expected to clock at 2.66GHz with a 140W TDP, and AMD's MCM-based Magny-Cours doing well to hit 130W ACP in the same speed bins, CIOs balancing power and performance will need to break out the spreadsheets to determine the winners here. With both platforms running 4-channel DDR3, neither side gains a power or price advantage from memory: the relative price-performance and power consumption of the CPUs will be the major factors. Assuming our extrapolations are correct, we're looking at a slight edge to AMD in performance-per-watt in the 2P segment, and a significant advantage in the 4P segment.


Labels:

48-core,

AMD,

dunnington,

Ethics and Technology,

hp dl785 g6,

iommu,

istanbul,

magny-cours,

nehalem-ex,

opteron,

performance per watt,

Servers,

sr5690,

top vmmark score,

Virtualization,

vmmark,

VMWare,

xeon

Thursday, August 20, 2009

In Part 2 of this series we introduced the storage architecture that we would use for the foundation of our "shared storage" necessary to allow vMotion to do its magic. As we have chosen NexentaStor for our VSA storage platform, we have the choice of either NFS or iSCSI as the storage backing.

In Part 3 of this series we will install NexentaStor, make some file systems and discuss the advantages and disadvantages of NFS and iSCSI as the storage backing. By the end of this segment, we will have everything in place for the ESX and ESXi virtual machines we'll build in the next segment.


Part 3, Building the VSA

For DRAM memory, our lab system has 24GB of RAM which we will apportion as follows: 2GB overhead to host, 4GB to NexentaStor, 8GB to ESXi, and 8GB to ESX. This leaves 2GB that can be used to support a vCenter installation at the host level.

Our lab mule is configured with 2x250GB SATA II drives, each with roughly 230GB of VMFS-partitioned storage. Subtracting 10% for overhead, the sum of our virtual disks will be limited to about 415GB. Because of these size restrictions, we will try to maximize available storage while limiting our exposure in case of disk failure. Therefore, we'll put the ESXi server on drive "A" and the ESX server on drive "B", with the virtual disks of the VSA split across both disks.
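The memory and disk budgets above can be checked with a few lines of Python. All figures come from the text; the 230GB per drive is approximate, which is why the result (about 414GB) is rounded to 415GB above.

```python
# Memory and disk budgets for the lab mule, using the figures in the text.
HOST_RAM_GB = 24
ram_plan_gb = {"host overhead": 2, "NexentaStor VSA": 4, "ESXi VM": 8, "ESX VM": 8}
spare_ram_gb = HOST_RAM_GB - sum(ram_plan_gb.values())  # left for vCenter

VMFS_PER_DRIVE_GB = 230  # approximate usable VMFS space per 250GB drive
DRIVES = 2
OVERHEAD = 0.10          # 10% held back for overhead
vdisk_budget_gb = VMFS_PER_DRIVE_GB * DRIVES * (1 - OVERHEAD)

print(f"spare RAM: {spare_ram_gb}GB, virtual disk budget: ~{vdisk_budget_gb:.0f}GB")
```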

Our VSA Virtual Hardware

For lab use, a VSA with 4GB RAM and 1 vCPU will suffice. Additional vCPUs would only constrain CPU scheduling for our virtual ESX/ESXi servers, so we'll leave it at the minimum. Since we're splitting storage roughly equally across the disks, and the installation of ESXi already took up an additional 4GB on disk "A", we'll place the VSA's definition and "boot" disk on disk "B"; otherwise, we'll interleave disk slices equally across both disks.

- Datastore - vLocalStor02B, 8GB vdisk size, thin provisioned, SCSI 0:0

- Guest Operating System - Solaris, Sun Solaris 10 (64-bit)

- Resource Allocation

  - CPU Shares - Normal, no reservation

  - Memory Shares - Normal, 4096MB reservation

- No floppy disk

- CD-ROM drive - mapped to ISO image of NexentaStor 2.1 EVAL, connect at power on enabled

- Network Adapters - Three total

  - One to "VLAN1 Mgt NAT" and

  - Two to "VLAN2000 vSAN"

- Additional Hard Disks - 6 total

  - vLocalStor02A, 80GB vdisk, thick, SCSI 1:0, independent, persistent

  - vLocalStor02B, 80GB vdisk, thick, SCSI 2:0, independent, persistent

  - vLocalStor02A, 65GB vdisk, thick, SCSI 1:1, independent, persistent

  - vLocalStor02B, 65GB vdisk, thick, SCSI 2:1, independent, persistent

  - vLocalStor02A, 65GB vdisk, thick, SCSI 1:2, independent, persistent

  - vLocalStor02B, 65GB vdisk, thick, SCSI 2:2, independent, persistent
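A quick tally of the virtual disks listed above shows how the layout balances the two local datastores. It counts the 4GB of ESXi installation overhead on disk "A" mentioned earlier, and counts the thin-provisioned 8GB boot disk at its full size (a conservative assumption).

```python
# Per-datastore tally of the VSA's virtual disks (sizes in GB).
vsa_vdisks = [
    ("vLocalStor02B", 8),   # boot disk; thin, counted at full size
    ("vLocalStor02A", 80), ("vLocalStor02B", 80),
    ("vLocalStor02A", 65), ("vLocalStor02B", 65),
    ("vLocalStor02A", 65), ("vLocalStor02B", 65),
]

# Start disk "A" at 4GB to account for the ESXi installation noted above.
usage_gb = {"vLocalStor02A": 4, "vLocalStor02B": 0}
for datastore, size_gb in vsa_vdisks:
    usage_gb[datastore] += size_gb

thick_total_gb = sum(size for _, size in vsa_vdisks[1:])  # data disks only
print(usage_gb, thick_total_gb)
```

Disk "A" ends up at 214GB and disk "B" at 218GB. The thick data disks alone add up to 420GB, slightly more than the 415GB planning estimate, so that figure is best read as approximate.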

NOTE: It is important to realize here that the virtual disks above could have been provided by VMDKs on the same disk, VMDKs spread across multiple disks, or RDMs mapped to raw SCSI drives. If your lab chassis has multiple hot-swap bays or just generous internal storage, you might want to try providing NexentaStor with RDMs or one VMDK per physical disk for performance testing or "near" production use. CPU, memory and storage are the basic elements of virtualization, and there is no reason that storage must be the bottleneck. For instance, this environment is GREAT for testing SSD applications on a limited budget.

Tuesday, August 18, 2009

In-the-Lab: Full ESX/vMotion Test Lab in a Box, Part 2

In Part 1 of this series we introduced the basic Lab-in-a-Box platform and outlined how it would be used to provide the three major components of a vMotion lab: (1) shared storage, (2) high speed network and (3) multiple ESX hosts. If you have followed along in your lab, you should now have an operating VMware ESXi 4 system with at least two drives and a properly configured network stack.

In Part 2 of this series we're going to deploy a Virtual Storage Appliance (VSA) based on an open storage platform that uses Sun's Zettabyte File System (ZFS) as its underpinnings. We've been working with Nexenta's NexentaStor SAN operating system for some time now and will use it - with its web-based volume management - instead of deploying OpenSolaris and creating storage manually.


Part 2, Choosing a Virtual Storage Architecture

To get started on the VSA, we want to identify some key features and concepts that caused us to choose NexentaStor over a myriad of other options. These are:

- NexentaStor is based on open storage concepts and licensing;

- NexentaStor comes in a "free" developer's version with 2TB of managed storage;

- NexentaStor developer's version includes snapshots, replication, CIFS, NFS and performance monitoring facilities;

- NexentaStor is available in a fully supported, commercially licensed variant with very affordable $/TB licensing costs;

- NexentaStor has proven extremely reliable and forgiving in the lab and in the field;

- Nexenta is a VMware Technology Alliance Partner with VMware-specific plug-ins (commercial product) that facilitate the production use of NexentaStor with little administrative input;

- Sun's ZFS (and hence NexentaStor) was designed for commodity hardware and makes good use of additional RAM for cache as well as SSDs for read and write caching;

- Sun's ZFS is designed to maximize end-to-end data integrity - a key point when ALL system components live in the storage domain (i.e. virtualized);

- Sun's ZFS employs several "simple but advanced" architectural concepts that maximize performance on commodity hardware: increasing IOPS and reducing latency;

While the performance features of NexentaStor/ZFS are well outside the capabilities of an inexpensive "all-in-one-box" lab, the concepts behind them are important enough to touch on briefly. Once understood, the concepts behind ZFS make it a compelling architecture to use with virtualized workloads. Eric Sproul has a short slide deck on ZFS that's worth reviewing.

ZFS and Cache - DRAM, Disks and SSDs

Legacy SAN architectures are typically split into two elements: cache and disks. While not always monolithic, caches in legacy storage are typically single-purpose pools set aside to hold frequently accessed blocks of storage, allowing this information to be read from or written to RAM instead of disk. Such caches are generally very expensive to expand (when expansion is possible at all) and may only accommodate one specific cache function (i.e. read or write, not both). Storage vendors employ many strategies to "predict" what information should stay in cache and how to manage it to improve overall storage throughput.

[caption id="attachment_968" align="aligncenter" width="405" caption="New cache model used by ZFS allows main memory and fast SSDs to be used as read cache and write cache, reducing the need for large DRAM cache facilities."]

[/caption]
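The caption's model can be illustrated with a toy two-tier read cache: a small DRAM tier in front of a larger SSD tier in front of disk. This is a hedged sketch, not ZFS's implementation. ZFS uses the Adaptive Replacement Cache (ARC) rather than the plain LRU policy used here, its L2ARC is fed ahead of eviction rather than on eviction, and write caching through the ZIL is not modeled at all. Tier sizes and the access pattern are arbitrary.

```python
from collections import OrderedDict


class LRUTier:
    """Fixed-size LRU cache tier (a stand-in for ZFS's adaptive ARC policy)."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.entries = OrderedDict()

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        """Insert a block; return the evicted (key, value) pair, if any."""
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            return self.entries.popitem(last=False)  # least recently used
        return None


class TieredReadCache:
    """DRAM tier in front of an SSD tier in front of disk (read path only)."""

    def __init__(self, dram_blocks: int, ssd_blocks: int, disk: dict):
        self.dram = LRUTier(dram_blocks)  # plays the role of the ARC
        self.ssd = LRUTier(ssd_blocks)    # plays the role of the L2ARC
        self.disk = disk
        self.hits = {"dram": 0, "ssd": 0, "disk": 0}

    def read(self, block):
        value = self.dram.get(block)
        if value is not None:
            self.hits["dram"] += 1
            return value
        value = self.ssd.get(block)
        if value is not None:
            self.hits["ssd"] += 1
        else:
            self.hits["disk"] += 1
            value = self.disk[block]
        evicted = self.dram.put(block, value)
        if evicted is not None:
            self.ssd.put(*evicted)  # blocks evicted from DRAM are written to SSD
        return value


disk = {n: f"block-{n}" for n in range(100)}
cache = TieredReadCache(dram_blocks=2, ssd_blocks=4, disk=disk)
for block in [0, 1, 2, 3, 0, 1, 2, 3, 3]:
    cache.read(block)
print(cache.hits)
```

With a two-block DRAM tier, the second pass over four blocks misses DRAM but is served entirely from the SSD tier instead of disk, which is the working-set overflow case an L2ARC device is meant to absorb.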


Labels:

alternative to freenas,

alternative to openfiler,

esx,

esxi,

In-the-Lab,

iscsi storage,

l2arc,

nexenta,

nexentastor,

open storage,

open storage for esx,

primer on nexentastor,

primer on vsphere,

Virtualization,

vmotion storage,

VMWare,

vmware technology partner,

vsphere,

zfs,

zil

Monday, August 17, 2009

In-the-Lab: Full ESX/vMotion Test Lab in a Box, Part 1

There are many features in vSphere worth exploring but to do so requires committing time, effort, testing, training and hardware resources. In this feature, we'll investigate a way - using your existing VMware facilities - to reduce the time, effort and hardware needed to test and train-up on vSphere's ESXi, ESX and vCenter components. We'll start with a single hardware server running VMware ESXi free as the "lab mule" and install everything we need on top of that system.


Part 1, Getting Started

To get started, here are the major hardware and software items you will need to follow along:

Recommended Lab Hardware Components


- One 2P, 6-core AMD "Istanbul" Opteron system

- Two 500-1,500GB Hard Drives

- 24GB DDR2/800 Memory

- Four 1Gbps Ethernet Ports (4x1, 2x2 or 1x4)

- One 4GB SanDisk "Cruzer" USB Flash Drive

- Either of the following:

  - One CD-ROM with VMware-VMvisor-Installer-4.0.0-164009.x86_64.iso burned to it

  - An IP/KVM management card to export ISO images to the lab system from the network

Recommended Lab Software Components

- One ISO image of NexentaStor 2.x (for the Virtual Storage Appliance, VSA, component)

- One ISO image of ESX 4.0

- One ISO image of ESXi 4.0

- One ISO image of vCenter Server 4

- One ISO image of Windows Server 2003 STD (for vCenter installation and testing)

For the hardware items to work, you'll need to check your system components against the VMware HCL and community-supported hardware lists. For best results, always disable (in BIOS) or physically remove all unsupported or unused hardware; this includes communication ports, USB, software RAID, etc. Doing so will reduce potential conflicts from unsupported devices.

The Lab Setup

We're first going to install VMware ESXi 4.0 on the "test mule" and configure the local storage for maximum use. Next, we'll create three (3) virtual machines to form our "virtual testing lab" - deploying ESX, ESXi and NexentaStor directly on top of our ESXi "test mule." All subsequent test VMs will run in either of the virtualized ESX platforms from shared storage provided by the NexentaStor VSA.

[caption id="attachment_929" align="aligncenter" width="450" caption="ESX, ESXi and VSA running atop ESXi"]

[/caption]

Next up, quick-and-easy install of ESXi to USB Flash...

Labels:

diy,

esx on esx,

esx on esxi,

esx server,

esxi on esxi,

In-the-Lab,

nexenta,

nexentastor,

primer on ESXi,

primer on vsphere,

test lab,

vcenter,

Virtualization,

virtualization in a box,

vmotion,

VMWare,

vmware lab,

VSA,

vsphere

Wednesday, August 12, 2009

Quick Take: HP Plants the Flag with 48-core VMmark Milestones

Last month we predicted that HP could easily claim the VMmark summit with its DL785 G6 using AMD's Istanbul processors.

Well, HP didn't make us wait long. Today, the PC maker cleared two significant VMmark milestones: crossing the 30-tile barrier in a single system (180 VMs) and pushing the VMmark score past 40. With a score of 47.77@30 tiles, the HP DL785 G6 - powered by 8 AMD Istanbul 8439 SE processors and 256GB of DDR2/667 memory - sets the bar well beyond the competition, and does so with better performance than we expected, most likely due to AMD's "HT assist" technology improving its scalability.

Not available until September 14, 2009, the HP DL785 G6 is a pricey competitor. We estimate - based on today's processor and memory prices - that a system as well appointed as the VMmark-configured version (additional NICs, HBA, etc.) will run at least $54,000, or around $300/VM (about $60/VM more than the 24-core contender and about $35/VM less than HP's Dunnington "equivalent").

SOLORI's Take: While the September timing of the release might imply a G6 with AMD's SR5690 and IOMMU, we're doubtful that the timing is anything but a coincidence: even though such a pairing would enable PCIe 2.0 and highly effective 10Gbps solutions. The modular design of the DL785 series - with its ability to scale from 4P to 8P in the same system - mitigates the economic realities of the dwindling 8P segment, and HP has delivered the pinnacle of performance for this technology.

We are also impressed with HP's performance team and their ability to scale Shanghai to Istanbul with relative efficiency. Moving from DL785 G5 quad-core to DL785 G6 six-core was an almost perfect linear increase in capacity (95% of theoretical increase from 32-core to 48-core) while performance-per-tile increased by 6%. This further demonstrates the "home run" AMD has hit with Istanbul and underscores the excellent value proposition of Socket-F systems over the last several years.

Unfortunately, while they demonstrate a 91% scaling efficiency from 12-core to 24-core, HP and Istanbul have only achieved a 75% incremental scaling efficiency from 24-cores to 48-cores. When looking at tile-per-core scaling using the 8-core, 2P system as a baseline (1:1 tile-to-core ratio), 2P, 4P and 8P Istanbul deliver 91%, 83% and 62.5% efficiencies overall, respectively. However, compared to the %58 and 50% tile-to-core efficiencies of Dunnington 4P and 8P, respectively, Istanbul clearly dominates the 4P and 8P performance and price-performance landscape in 2009.

In today's age of virtualization-driven scale-out, SOLORI's calculus indicates that multi-socket solutions that deliver a tile-to-core ratio of less than 75% will not succeed (economically) in the virtualization use case in 2010, regardless of socket count. That said - even at a 2:3 tile-to-core ratio - the 8P, 48-core Istanbul will likely reign supreme as the VMmark heavy-weight champion of 2009.

SOLORI's 2nd Take: HP and AMD's achievements with this Istanbul system should be recognized before we usher-in the next wave of technology like Magny-Cours and Socket G34. While the DL785 G6 is not a game changer, its footnote in computing history may well be as a preview of what we can expect to see out of Magny-Cours in 2H/2010. If 12-core, 4P system price shrinks with the socket count we could be looking at a $150/VM price-point for a 4P system: now that would be a serious game changer.

If AMD’s Istanbul scales to 8-socket at least as efficiently as Dunnington, we should be seeing some 48-core results in the 43.8@30 tile range in the next month or so from HP’s 785 G6 with 8-AMD 8439 SE processors. You might ask: what virtualization applications scale to 48-cores when $/VM is doubled at the same time? We don’t have that answer, and judging by Intel and AMD’s scale-by-hub designs coming in 2010, that market will need to be created at the OEM level.

Well, HP didn't make us wait too long. Today, the PC maker cleared two significant VMmark milestones: crossing the 30-tile barrier in a single system (180 VMs) and exceeding a VMmark score of 40. With a score of 47.77@30 tiles, the HP DL785 G6 (powered by 8 AMD Istanbul 8439 SE processors and 256GB of DDR2/667 memory) sets the bar well beyond the competition, and does so with better performance than we expected, most likely because AMD's "HT Assist" technology improves its scalability.

Not available until September 14, 2009, the HP DL785 G6 is a pricey competitor. We estimate, based on today's processor and memory prices, that a system as well appointed as the VMmark-configured version (additional NICs, HBA, etc.) will run at least $54,000, or around $300/VM (about $60/VM higher than the 24-core contender and about $35/VM lower than HP's Dunnington "equivalent").
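The per-VM figures above come from dividing the estimated system price by the VM count. A VMmark tile is six VMs, so 30 tiles gives 180 VMs. This is a minimal sketch. The two comparison values simply apply the post's $60 and $35 offsets to the result; they are not independently sourced prices.

```python
VMS_PER_TILE = 6  # VMmark tile size, consistent with 30 tiles = 180 VMs

def cost_per_vm(system_cost, tiles):
    """Estimated system price divided by the number of VMs it hosts."""
    return system_cost / (tiles * VMS_PER_TILE)

dl785 = cost_per_vm(54_000, 30)
print(f"DL785 G6: ${dl785:.0f}/VM")
print(f"24-core contender: about ${dl785 - 60:.0f}/VM")        # post's offset
print(f"Dunnington 'equivalent': about ${dl785 + 35:.0f}/VM")  # post's offset
```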

SOLORI's Take: While the September timing of the release might imply a G6 with AMD's SR5690 chipset and IOMMU, we doubt the timing is anything but a coincidence, even though such a pairing would enable PCIe 2.0 and highly effective 10Gbps solutions. The modular design of the DL785 series, with its ability to scale from 4P to 8P in the same system, mitigates the economic realities of the dwindling 8P segment, and HP has delivered the pinnacle of performance for this technology.

We are also impressed with HP's performance team and their ability to scale Shanghai to Istanbul with relative efficiency. Moving from DL785 G5 quad-core to DL785 G6 six-core was an almost perfect linear increase in capacity (95% of theoretical increase from 32-core to 48-core) while performance-per-tile increased by 6%. This further demonstrates the "home run" AMD has hit with Istanbul and underscores the excellent value proposition of Socket-F systems over the last several years.

Unfortunately, while they demonstrate a 91% scaling efficiency from 12 cores to 24 cores, HP and Istanbul achieve only a 75% incremental scaling efficiency from 24 cores to 48 cores. Looking at tile-per-core scaling with the 8-core, 2P system as the baseline (1:1 tile-to-core ratio), 2P, 4P and 8P Istanbul deliver 91%, 83% and 62.5% efficiency overall, respectively. However, compared to the 58% and 50% tile-to-core efficiencies of Dunnington 4P and 8P, respectively, Istanbul clearly dominates the 4P and 8P performance and price-performance landscape in 2009.
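The efficiencies in the paragraph above can be reproduced from two simple ratios. This sketch assumes tile counts of 11 for the 2P, 12-core system and 20 for the 4P, 24-core system. Those counts are back-computed from the stated percentages, not quoted from published results. The 30-tile, 48-core figure comes from the post.

```python
# (cores, tiles); 11 and 20 tiles are assumptions back-computed from the text.
ISTANBUL = {"2P": (12, 11), "4P": (24, 20), "8P": (48, 30)}

def tile_to_core(cores, tiles):
    """Tiles per core, relative to the 1:1 tile-to-core baseline."""
    return tiles / cores

def incremental(small, large):
    """Growth in tiles divided by growth in cores between two systems."""
    (c0, t0), (c1, t1) = small, large
    return (t1 / t0) / (c1 / c0)

for name, (cores, tiles) in ISTANBUL.items():
    print(f"{name}: {tile_to_core(cores, tiles):.1%} tile-to-core")
print(f"12->24 cores: {incremental(ISTANBUL['2P'], ISTANBUL['4P']):.0%}")
print(f"24->48 cores: {incremental(ISTANBUL['4P'], ISTANBUL['8P']):.0%}")
```

With these inputs the 2P ratio comes out at 91.7%, which the post rounds down to 91%.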

In today's age of virtualization-driven scale-out, SOLORI's calculus indicates that multi-socket solutions delivering a tile-to-core ratio below 75% will not succeed economically in the virtualization use case in 2010, regardless of socket count. That said, even at a 5:8 (62.5%) tile-to-core ratio, the 8P, 48-core Istanbul will likely reign supreme as the VMmark heavyweight champion of 2009.

SOLORI's 2nd Take: HP and AMD's achievements with this Istanbul system should be recognized before we usher in the next wave of technology like Magny-Cours and Socket G34. While the DL785 G6 is not a game changer, its footnote in computing history may well be as a preview of what we can expect from Magny-Cours in 2H/2010. If 12-core, 4P system prices shrink along with the socket count, we could be looking at a $150/VM price point for a 4P system: now that would be a serious game changer.

Labels:

24-core,

48-core,

AMD,

DL785 G6,

dunnington,

Ethics and Technology,

Intel,

istanbul,

New Products,

opteron,

Quick Take,

Servers,

sr5690,

top score,

Virtualization,

vmmark,

VMWare,

xeon

Monday, August 10, 2009

Quick Take: 6-core "Gulftown" Nehalem-EP Spotted, Tested

TechReport is reporting on a Taiwanese overclocker who may be testing a pair of Nehalem 6-core processors (2P) slated for release early in 2010. Likewise, AlienBabelTech mentions a Chinese website, HKEPC, that has preliminary testing completed on the desktop (1P) variant of the 6-core. While these could be different 32nm silicon parts, it is more likely - judging from the CPU-Z outputs and provided package pictures - that these are the same sample SKUs tested as 1P and 2P LGA-1366 components.

What does this mean for AMD and the only 6-core processor shipping today? Since Intel is still projecting Q2/2010 for the server part, AMD has a decent opportunity to grow market share for Istanbul. Intel's biggest rival will be itself: it faces a wildly growing number of SKUs across its i-line (the i5, i7, i8 and i9 "families"), each with multiple speed and feature variants. Clearly, the non-HT 6-core Nehalem would stand as a direct competitor to Istanbul's native 6-core SKUs; conversely, Istanbul may be no match for the 6-core Nehalem with its HT and Turbo Boost feature set.

However, with an 8-core "Beckton" Nehalem variant on the horizon, it may be hard to see just where Gulftown fits in Intel's picture. Intel faces a serious production issue: filling fab capacity with 4-core, 6-core and 8-core processors, each with speed, power, socket and HT variants, to supply high-speed, high-power SKUs and lower-speed, low-power SKUs for 1P, 2P and 4P+ destinations. Doing the simple math with 3 SKUs per part, Intel would be offering the market a minimum of 18 base parts under its current marketing strategy: 9 with HT/turbo and 9 without. For socket LGA-1366, this could easily mean 40+ SKUs once 1xQPI and 2xQPI variants are included (up from 23).
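The 18-part floor above is a simple cross product of three core counts, three SKUs per part and an HT/turbo on/off split. The bin names below are hypothetical placeholders. Only the counts come from the post.

```python
from itertools import product

CORE_COUNTS = (4, 6, 8)
BINS = ("high-speed", "mainstream", "low-power")  # hypothetical 3 SKUs per part
HT_TURBO = (True, False)                          # with / without HT and turbo

base_parts = list(product(CORE_COUNTS, BINS, HT_TURBO))
with_ht = sum(1 for _cores, _bin, ht in base_parts if ht)
print(len(base_parts), with_ht, len(base_parts) - with_ht)
```

Multiplying `len(base_parts)` by two for 1xQPI and 2xQPI variants gives 36. That total is before any socket or power variants are added.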

SOLORI's take: Intel will have to come up with some interesting "crippling or pricing tricks" to keep Gulftown from cannibalizing the Gainestown market. If they follow their "normal" playbook, we predict the next 10 months will play out like this:

- Initially there will be no 8-core product for 1P and 2P systems (LGA-1366), allowing artificially high margins on the 8-core EX chip (LGA-1567), slowing the inevitable cannibalization of the 4-core/2P market, and easing production burdens;

- Intel will silently and abruptly kill Itanium in favor of "hyper-scale" Nehalem-EX variants;

- Gulftown will remain high-power (90-130W TDP) and be positioned against AMD's G34 systems and Magny-Cours, pitting 12 cores against 12 threads;

- Intel will create a "socket refresh" (LGA-1566?) to enable "inexpensive" 2P-4P platforms from its Gulftown/Beckton line-up in 2H/2010 (ostensibly to maintain parity with G34) without hurting EX;

- Revised, lower-power variants of Gainestown will be positioned against AMD's C32 target market;

- Intel will cut SKUs in favor of higher margins, increasing speed and features for "same dollar" cost;

- Non-HT parts will begin to disappear completely from 4-core configurations;

- Intel's AES enhancements in Gulftown will allow it to further differentiate itself in storage and security markets.

It would be a mistake for Intel to continue growing SKU count or to provide too much overlap between 4-core HT and 6-core non-HT offerings. If purchasing trends soften in 4Q/09 and remain (relatively) flat through 2Q/10, Intel will benefit from a leaner, well-differentiated line-up. AMD has already announced a "leaner" plan for G34/C32. If all goes well at the fabs, 1H/2010 will be a good old-fashioned street fight between blue and green.

Labels:

6-core,

8 core,

AMD,

beckton,

c32,

g34,

gainstown,

gulftown,

Intel,

istanbul,

lga-1366,

lga-1567,

magny-cours,

nehalem-ep,

nehalem-ex,

New Products,

Quick Take,

Servers

Tuesday, August 4, 2009

Quick Take: DDR3 Prices on the Rise

In the current server-class arms race, Intel and AMD have secured separate quarters. Intel's QPI architecture, coupled to a 3-channel DDR3 memory bus and functional hyper-threading cores (top-bin parts), holds the pure-performance sector, while AMD's improved Istanbul cores, delivered 6 at a time and paired with inexpensive DDR2 memory, achieve better price-performance (on acquisition cost). Both solutions deliver about the same economies in power consumption under virtualized loads.

"All in all, the Twin2 with Xeon L5520 CPUs is the best platform for those seeking an affordable server with an excellent performance/watt ratio at an affordable price. On the other hand, if performance/price is the most important criterion followed by performance/watt, we would probably opt for the six-core Opteron version of the Twin2. Supermicro has 'a blade killer' available with the Twin², especially for those people who like to keep the hardware costs low." (Johan De Gelas, AnandTech, July 22, 2009)

[caption id="" align="alignright" width="335" caption="Global DDR2 and DDR3 Capacity"]

[/caption]

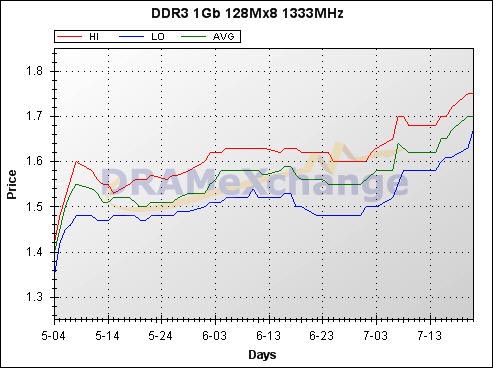

[/caption]Meanwhile, the cost differential between DDR3 and DDR2 continues to widen due to increased demand in the notebook sector and reduced supply (capacity). According to DRAMeXchange, the trend will continue into Q4/09 as suppliers are expected to commit up to 30% of capacity to DDR3 by that time.

At the same time, DDR3 prices continue to inch up (by 5% in July), while DDR2 prices appear to have bottomed out. This trend in DDR3 pricing is consistent across all speed ratings (1066/1333/1600) and, despite artificial downward price pressure from Samsung, prices have drifted upward 20% since May 2009.

[Figure: DDR3 Price Trend, May to August 2009]

Because low-end, lower-priced 2GB DDR3/1066 ($60/stick) memory shows little advantage over 2GB DDR2/800 ($35/stick), the roughly 70% price premium keeps DDR2 in demand. With the added pressures of the world economy and the cautious growth outlook of the manufacturing sector, the cross-over from DDR2 to DDR3 will come at a significant cost, either to the consumer or to the supplier.

Until the cross-over, DDR2-based systems will continue to be a favorite in price-sensitive applications (i.e., where total system cost plays a significant role in purchasing decisions). As an example of this economic inequality, compare the HP DL380 G6 and DL385 G6. Adding 16GB to the DL380 adds about $760 to the price tag (4x4GB DDR3-1066), while adding the same amount of memory to the DL385 adds only $410 (4x4GB DDR2-800). This comparison shows an 85% price premium for DDR3 versus DDR2, a bit higher (percentage-wise) than the desktop norm of 70%.
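The premiums quoted for desktop sticks and for the DL380/DL385 upgrade both use the same formula: DDR3 price divided by DDR2 price, minus one. The prices below are the ones given in this post.

```python
def premium(ddr3_price, ddr2_price):
    """Extra cost of DDR3 relative to DDR2, as a fraction of the DDR2 price."""
    return ddr3_price / ddr2_price - 1

desktop = premium(60, 35)   # 2GB DDR3/1066 vs 2GB DDR2/800, per stick
server = premium(760, 410)  # 16GB upgrade: DL380 G6 (DDR3) vs DL385 G6 (DDR2)
print(f"desktop: {desktop:.0%}, server: {server:.0%}")
```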

SOLORI's Take: While memory typically represents a small portion of overall system cost in desktop systems, the same cannot be said for virtualization systems, where entry configurations weigh in at 16GB and "fully loaded" systems often run from 48GB to 72GB. This, as our calculus has shown, is where the $/VM sweet spot lies.

In such configurations, DDR3 memory adds noticeably to the system cost ($6,370 for a 2P L5506 with 12x4GB DDR3-1066R vs. $5,201 for a 2P 2427 with 12x4GB DDR2-800, a difference of about $1,170, or roughly 22%). Moving to a larger memory footprint in 2P systems is typically not cost-effective because core count cannot keep up with the memory needs of the virtual machine inventory. However, if it were possible to use additional memory in the 2P platform, our benchmark price comparison of 8GB DDR3-1066 versus 8GB DDR2-667 tells another story. At $900/stick, 8GB DDR3 still costs about 2.35 times as much as 8GB DDR2 (a 135% premium), making 96GB DDR3 systems (2P Xeon w/HT) nearly $6,200 per server more costly than their DDR2 counterparts (2P Istanbul) based on memory pricing alone.
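This is a quick check of the memory arithmetic in the paragraph above. It reads the 2.35 figure as DDR3's price multiple over DDR2, because that is the reading that reproduces the roughly $6,200 gap. The 12-stick count follows from the 12x4GB (48GB) and 96GB (12x8GB) configurations. The implied DDR2 stick price is derived here; the post does not state it.

```python
STICKS = 12          # 12 DIMMs per 2P configuration (12x4GB or 12x8GB)
DDR3_8GB = 900       # $/stick, from the post
RATIO = 2.35         # DDR3 price as a multiple of DDR2 (interpretation)

ddr2_8gb = DDR3_8GB / RATIO                # implied DDR2 8GB price
gap_96gb = STICKS * (DDR3_8GB - ddr2_8gb)  # memory cost gap per 96GB server

system_gap = 6_370 - 5_201                 # 48GB system quotes: L5506 vs 2427
print(f"48GB systems: ${system_gap:,} ({system_gap / 5_201:.0%} more)")
print(f"96GB memory: implied DDR2 ${ddr2_8gb:.0f}/stick, gap ${gap_96gb:,.0f}")
```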

SOLORI's 2nd Take: We're hoping to see Tyan and Supermicro release SR5690 chipset-based systems (promised in Q3/2009) to take advantage of this pricing trend and round out the Istanbul offering before Q1/2010 ushers in the next wave of multi-core systems. With 10G prices on the decline, we think today's virtualization applications make Istanbul+IOMMU a good price-performance and price-feature fit in the 32-64GB memory footprint space, leaving Nehalem-EP with only the performance niche to its credit. The only question is: where is SR5690?
